Software developers have embraced artificial intelligence tools with the enthusiasm of kids discovering candy, but they trust the output about as much as a politician's promise.

Google Cloud’s 2025 DORA report, released Wednesday, shows that 90% of developers now use AI in their daily work, up from last year.

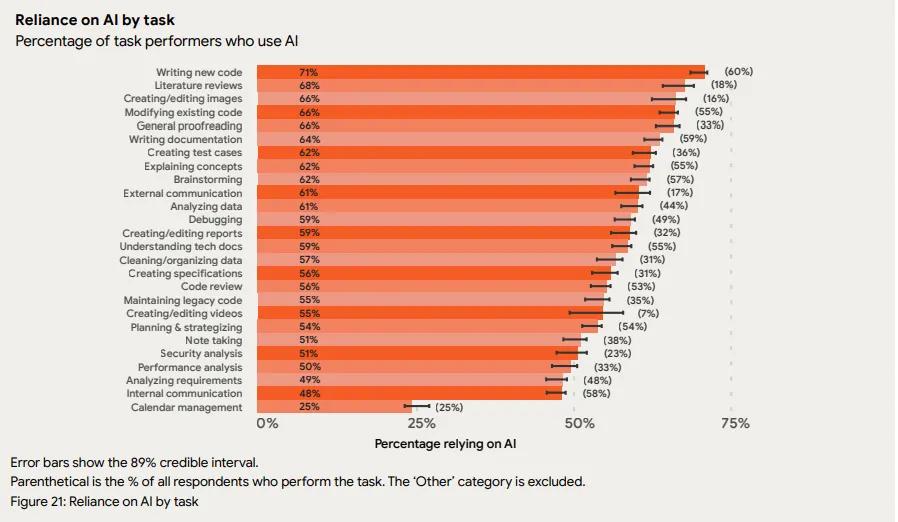

The report also found that only 24% of respondents actually trust the information these tools generate.

The annual survey of nearly 5,000 technology professionals around the world paints a picture of an industry trying to move faster without breaking things.

Developers spend a median of two hours each day with their AI assistants and have integrated them into everything from code generation to security reviews. Yet 30% of these same professionals trust AI output only “a little” or “not at all.”

“If you’re a Google engineer, it’s inevitable that you’ll use AI as part of your daily work,” Ryan Salva, who oversees Google’s coding tools, including Gemini Code Assist, told CNN.

The company’s own metrics show that over a quarter of Google’s new code comes from AI systems, with CEO Sundar Pichai claiming a 10% productivity gain across the company’s engineering teams.

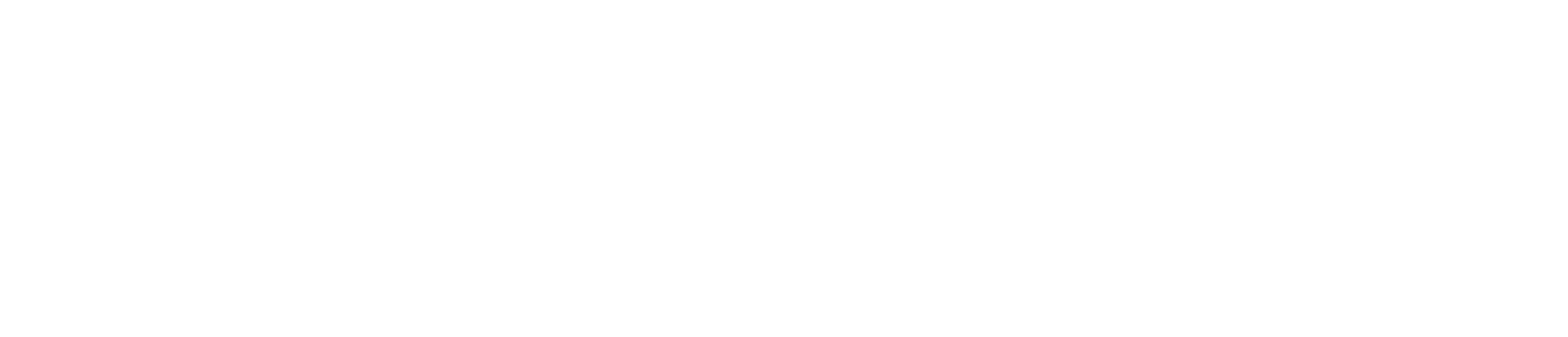

Developers mainly use AI to write new code and modify existing code. Other use cases include reviewing and maintaining legacy code, along with educational purposes such as explaining concepts and writing documentation.

Image: Google

Despite the lack of trust, over 80% of the developers surveyed reported that AI increased their productivity, while 59% said it improved code quality.

Here is where things get strange: 65% of respondents said they rely on these tools at least moderately, despite not fully trusting them.

Within that group, 37% reported “moderate” reliance, 20% said they rely on AI “a lot,” and 8% admitted to “a great deal” of dependence.

This trust-productivity paradox is consistent with the findings of Stack Overflow’s 2025 Developer Survey, in which distrust of AI accuracy rose from 31% to 46% in just one year despite an 84% adoption rate.

Developers are happy to use AI for brainstorming and grunt work, but everything needs to be double-checked.

Image: Stack Overflow

DORA: Promoting AI-native work

Google’s response goes beyond documenting trends.

On Tuesday, the company announced its DORA AI Capabilities Model, a framework that identifies seven practices designed to help organizations capture the value of AI without taking on undue risk.

The model advocates user-centric design, clear communication protocols, and what Google calls “small-batch workflows.” In essence, it steers teams away from letting AI operate unsupervised and unchecked.

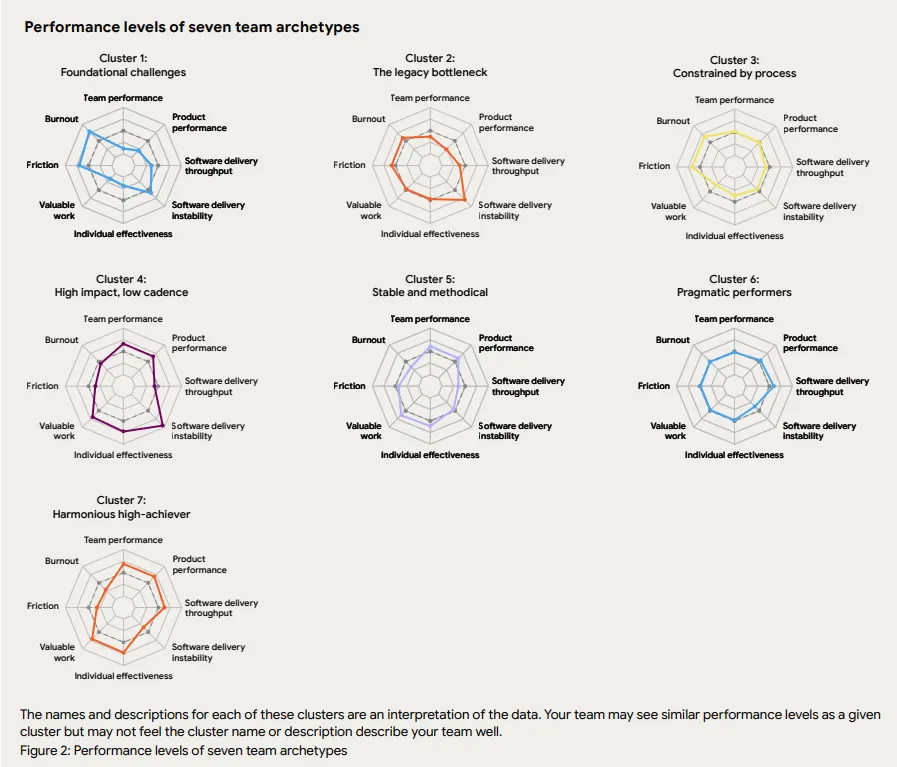

The report also introduces team archetypes, ranging from “harmonious high-achievers” to groups stuck in a “legacy bottleneck.”

These profiles emerged from an analysis of how different organizations handle AI integration. Teams with strong existing processes saw AI amplify their strengths, while fragmented organizations saw AI expose every weakness in their workflows.

Image: Google

The full State of AI-assisted Software Development report and the companion DORA AI Capabilities Model documentation are available from Google Cloud’s research portal.

The material includes prescriptive guidance for teams that want to take a more proactive approach to adopting AI technology.